Kushal Kedia

kk837@cornell.edu

PhD Student

I am a Ph.D. candidate in Computer Science at Cornell University (expected graduation in May 2026), advised by Prof. Sanjiban Choudhury and Prof. Wei-Chiu Ma. Currently, I am a visiting researcher working with Prof. Jeannette Bohg and Prof. C. Karen Liu at Stanford University.

My research goal is to build real-world robot systems that match human capabilities and are able to seamlessly collaborate with human partners. Towards this goal, I work on learning algorithms for robot decision making. I am especially interested in transfer learning from human videos and simulation.

I completed my undergraduate studies at IIT Kharagpur and have previously interned at Cruise and Microsoft Research India.

Link to CV.

News

| Oct, 2025 | Gave invited talks on “Moving Beyond Teleoperation: Robot Learning from Human Videos” at the University of Michigan’s Computation HRI course (course link), the Robotics Mobility Team at NVIDIA, and the Foundation Models Team at the RAI Institute. |

|---|---|

| Aug, 2025 | Our paper X-Sim received an oral presentation at CoRL 2025 and a Best Paper (runner-up) award at the EgoAct Workshop at RSS 2025. X-Sim trains real‑world image‑based robot policies entirely in simulation, without any teleoperation data. |

| Apr, 2025 | Cornell Chronicle featured our ICRA 2025 paper on RHyME (Retrieval for Hybrid Imitation under Mismatched Execution): “Robot see, robot do: System learns after watching how-tos.” |

| Oct, 2024 | Gave invited talks on “Transferring Collaborative Behaviors from Human-Human Teams” at RPM Lab, University of Minnesota and RobIn Lab, UT Austin. |

| May, 2024 | Released MOSAIC, a year-long collaborative effort combining multiple foundation models to build a multi-robot collaborative cooking system. MOSAIC won the Best Paper award at the VLMNM workshop and the Best Poster at the MoMa workshop @ ICRA 2024! |

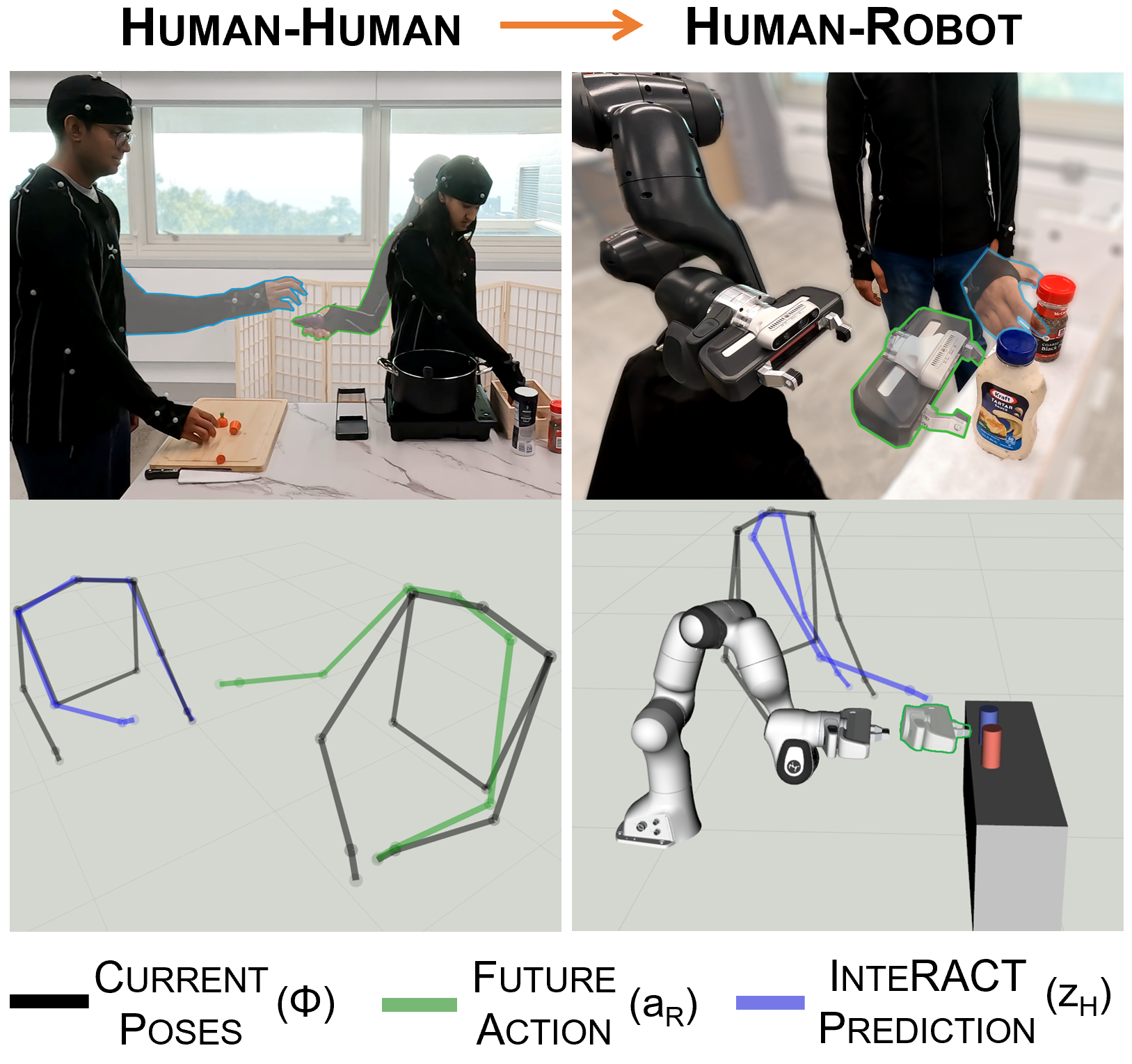

| Sep, 2023 | Excited to release the Collaborative Manipulation Dataset (CoMaD)! CoMaD captures over 6 hours of motion data from 14 unique users involving both human-human and human-robot (Franka robot arm) interactions in a kitchen setting. |

Selected Papers


SimToolReal: An Object-Centric Policy for Zero-Shot Dexterous Tool Manipulation

arXiv


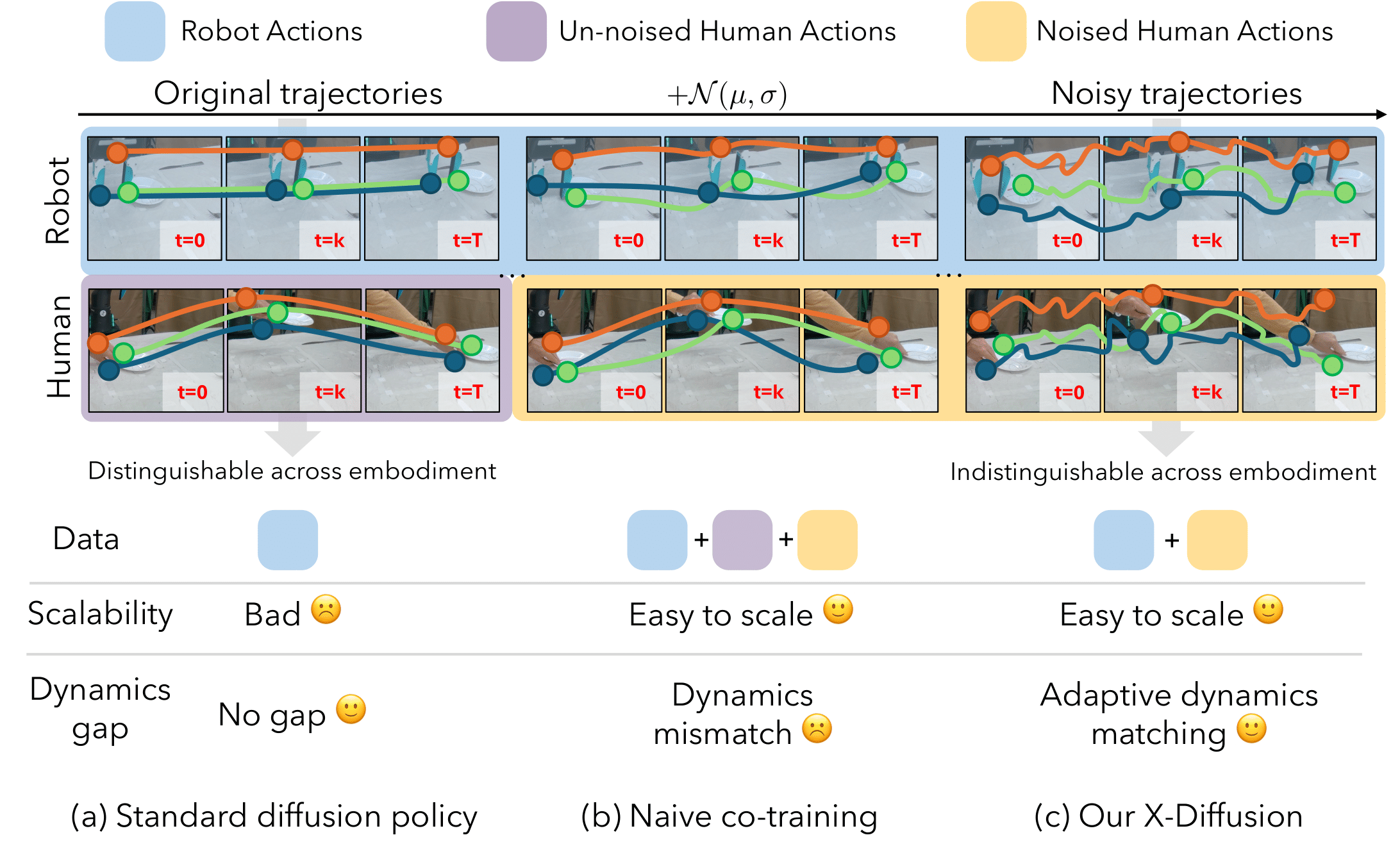

X-Diffusion: Training Diffusion Policies on Cross-Embodiment Human Demonstrations

ICRA 2026


X-Sim: Cross-Embodiment Learning via Real-to-Sim-to-Real

Oral Presentation @ CoRL 2025


Best Paper (Runner-Up) @ EgoAct Workshop, RSS 2025


MOSAIC: A Modular System for Assistive and Interactive Cooking

CoRL 2024


Best Paper @ VLMNM Workshop, ICRA 2024

Best Poster @ MoMa Workshop, ICRA 2024

InteRACT: Transformer Models for Human Intent Prediction Conditioned on Robot Actions

ICRA 2024


ManiCast: Collaborative Manipulation with Cost-Aware Human Forecasting

CoRL 2023
